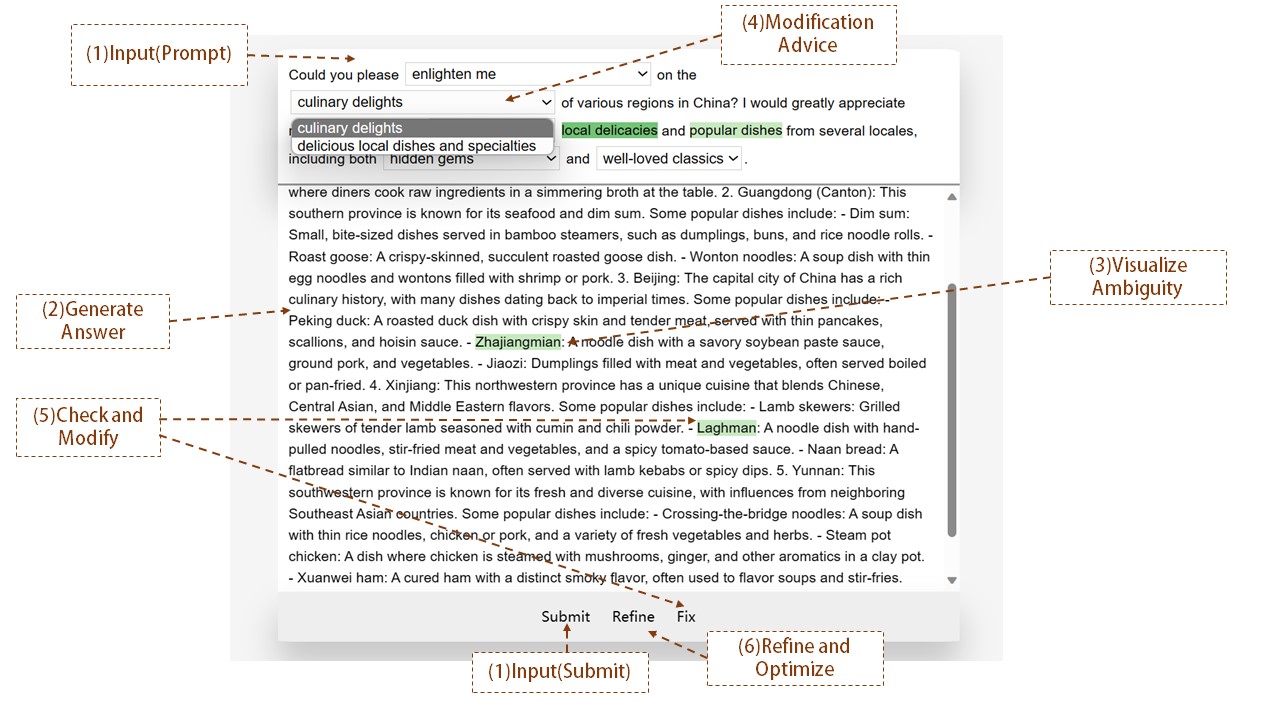

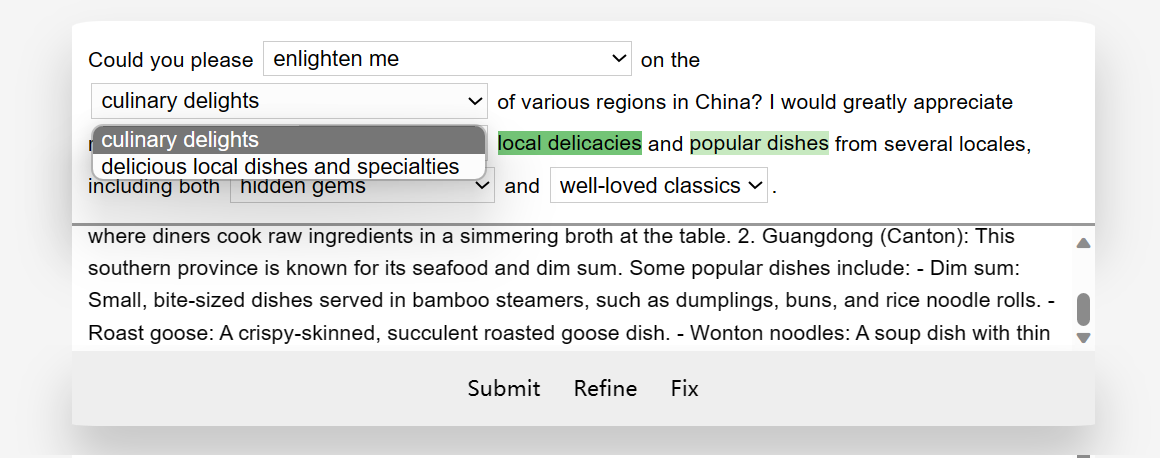

ProChatter is a web-based user interface that leverages human-AI collaborative editing to enable effective querying of conversational AIs. ProChatter resolves ambiguity from the perspective of prompt engineering.

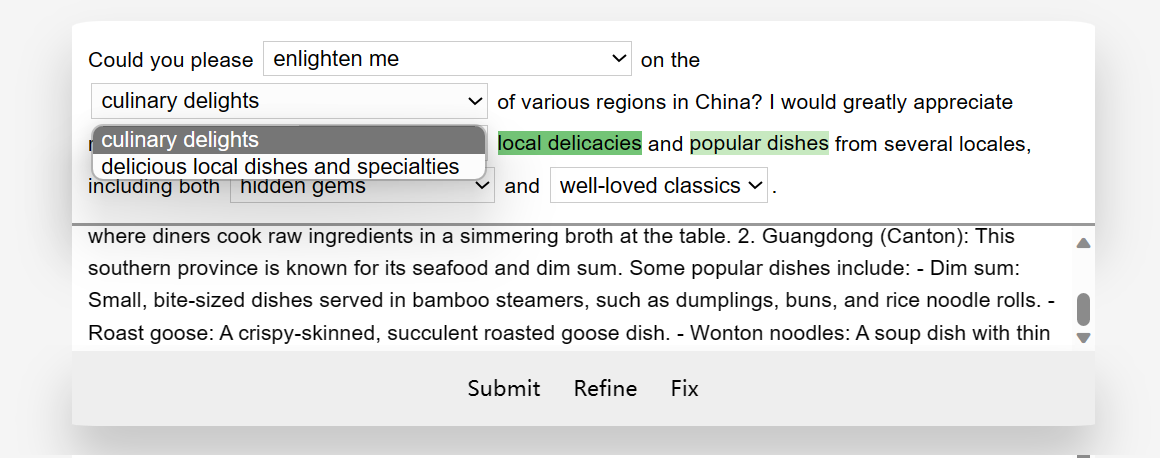

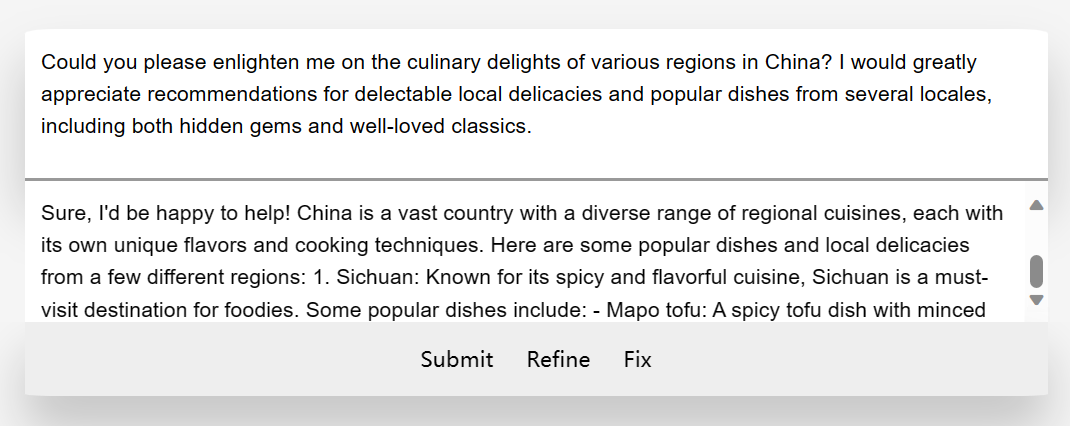

Illustration of ProChatter's functions. (1) Users input a prompt and click Submit to have ProChatter generate an answer. (2) ProChatter generates the answer and (3) analyzes and highlights the uncertainty in the output. Based on the highlighted uncertainty, ProChatter (4) presents modification suggestions. (5) Users can then check and modify the result, and (6) click Refine to let ProChatter optimize the answer.

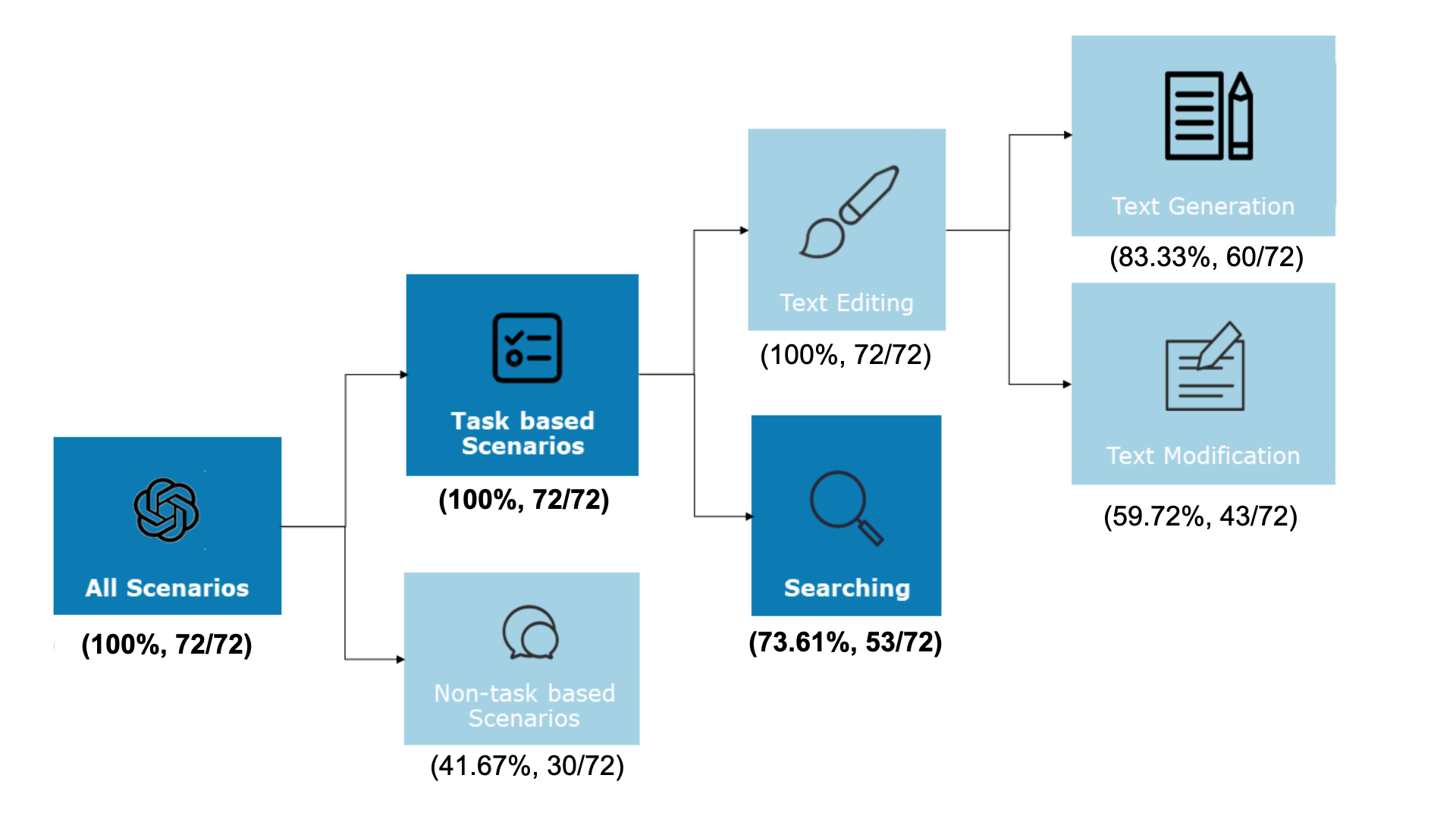

To find the pain points of using conversational AIs (powered by LLMs), we first conducted interviews to explore the tasks for which people use LLMs.

Scenarios of LLM use and their frequency. The targeted scenarios are shown in a darker color.

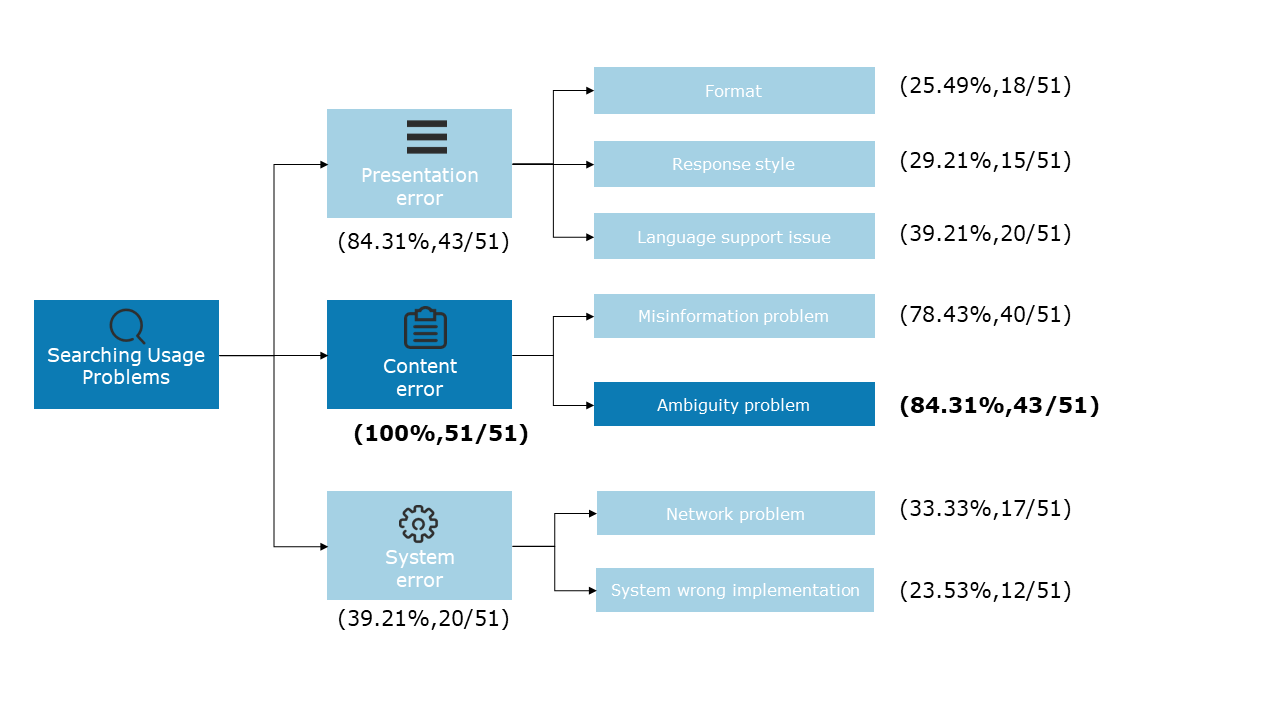

After identifying the diverse tasks LLMs are used for, we noted that some users reported issues with LLMs' search functionality, and there was a general consensus that prompting LLMs can be challenging.

Problems in using LLMs and their frequency. The targeted problems are shown in a darker color.

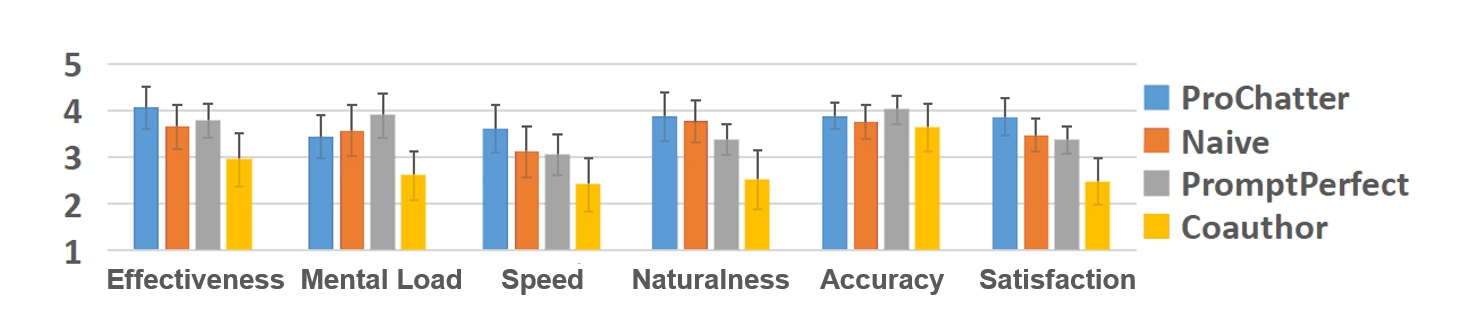

ProChatter outperforms the baseline techniques PromptPerfect, Naive, and CoAuthor.

(a) ProChatter's interface. (b) PromptPerfect's interface.

(c) Naive's interface. (d) CoAuthor's interface.

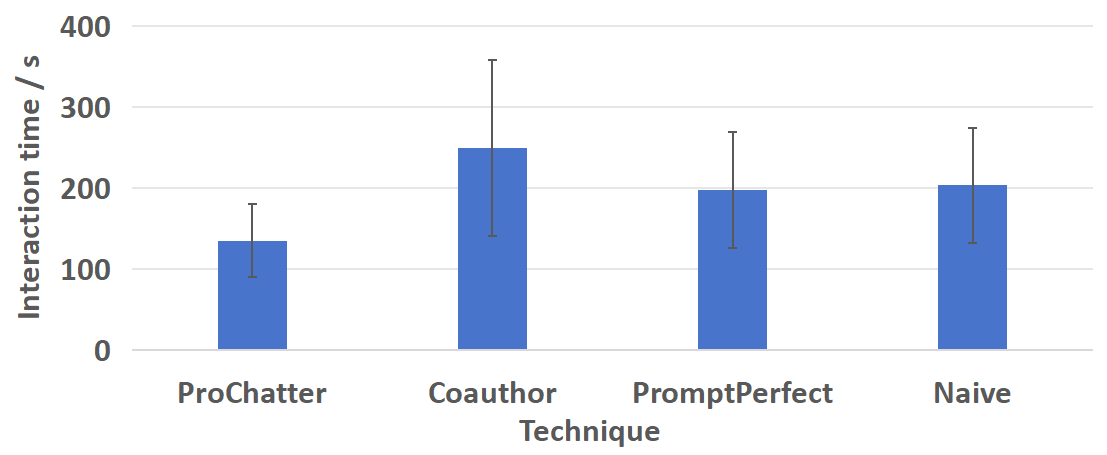

ProChatter has advantages in interaction time, interaction statistics, answer quality, and subjective ratings.

This bar graph shows the interaction time (vertical axis) for each technique (horizontal axis). Error bars indicate one standard deviation.

| Technique | Iterations | Submit | Refine | Fix | Select | Modify | Link |
| --- | --- | --- | --- | --- | --- | --- | --- |
| ProChatter | 4.66 (1.62) | 2.16 (0.80) | 2.50 (1.23) | 1.85 (0.37) | 1.78 (0.92) | 6.8 (7.2) | 0.40 (0.37) |
| CoAuthor | -- | 13.06 (4.43) | -- | -- | -- | 35.6 (35.8) | -- |
| PromptPerfect | 5.33 (1.67) | 2.18 (1.67) | 3.15 (1.25) | -- | 0.65 (0.37) | 11.6 (13.4) | -- |
| Naive | 6.09 (1.84) | 6.09 (1.84) | -- | -- | -- | 16.8 (18.3) | -- |

Interaction statistics for each technique, per question. All entries are counts except Modify. Iterations is the number of interaction rounds, i.e., the number of times users clicked the Submit or Refine button. Submit is the number of times users clicked the Submit button, which starts a new question. Refine is the number of times users clicked the Refine button to keep refining the current question for a better answer. Fix is the number of times users fixed content in the output. Select is the number of times users selected from the modification suggestions offered by the technique. Modify is the average number of tokens or characters users modified per question across all Submit and Refine rounds. Link is the number of times users viewed the correspondence of ambiguity highlights between the answer and the input question. Numbers in parentheses denote one standard deviation.
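Because Iterations counts clicks on either Submit or Refine, its per-question mean should equal the Submit mean plus the Refine mean (the mean of a sum is the sum of the means). The minimal Python sketch below checks this against the table. It uses only the ProChatter, PromptPerfect, and Naive rows (CoAuthor is omitted because its Iterations value is not reported) and assumes that a missing Refine value ("--") counts as zero. The names `MEANS` and `implied_iterations` are hypothetical and used only for illustration.

```python
# Per-question means copied from the table above.
# None marks a value reported as "--" (assumed here to mean zero clicks).
MEANS = {
    "ProChatter":    {"iterations": 4.66, "submit": 2.16, "refine": 2.50},
    "PromptPerfect": {"iterations": 5.33, "submit": 2.18, "refine": 3.15},
    "Naive":         {"iterations": 6.09, "submit": 6.09, "refine": None},
}


def implied_iterations(row):
    """Iterations = Submit + Refine; a missing Refine count is treated as 0."""
    return row["submit"] + (row["refine"] or 0.0)


for name, row in MEANS.items():
    implied = implied_iterations(row)
    print(f"{name}: reported {row['iterations']:.2f}, Submit + Refine = {implied:.2f}")
    # Allow a small tolerance for the two-decimal rounding in the table.
    assert abs(implied - row["iterations"]) < 0.01, name
```

All three rows agree, which supports reading Iterations as the total number of Submit and Refine rounds.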

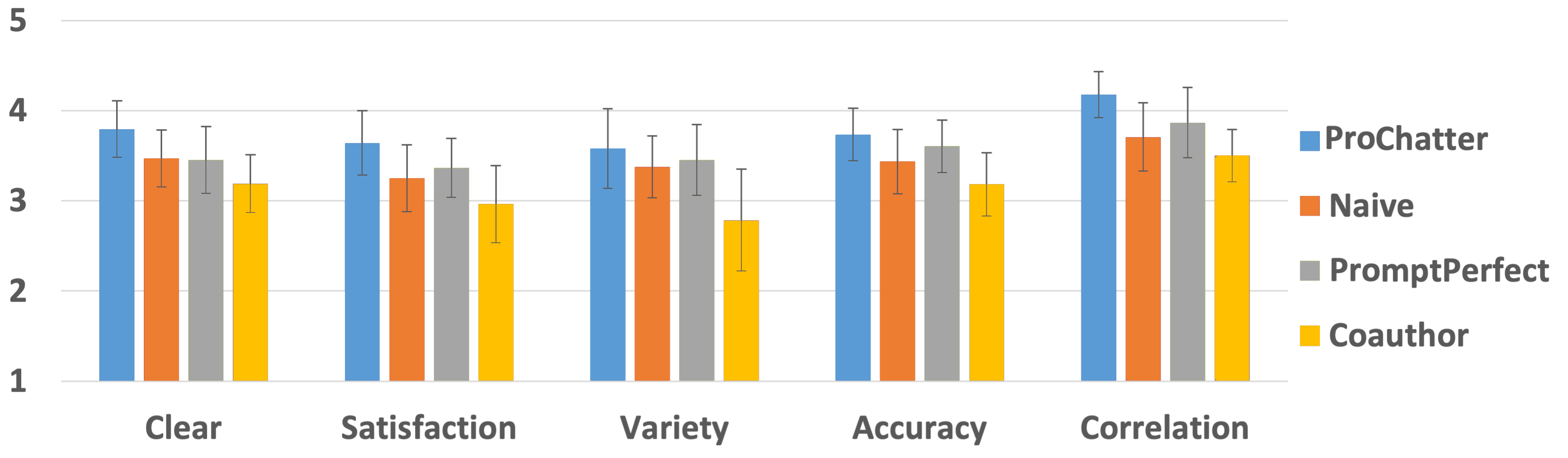

Subjective ratings of the answers from different perspectives (5: most positive, 1: most negative). Error bars indicate one standard deviation.

Subjective ratings comparing the techniques (5: most positive, 1: most negative). Error bars indicate one standard deviation.

We submitted this work to CSCW '24, and I am proud to have contributed to ProChatter as its undergraduate first author.